Understanding Gemini AI

Gemini, developed by Google DeepMind, is a family of multimodal AI models first announced in December 2023 and updated regularly since then. Unlike earlier AI models that focused mainly on text or images, Gemini is designed to work across several modalities at once, including text, images, audio, video, and code, with some ability to reason about spatial relationships in a scene.

For 3D modeling, this means users can:

- Describe objects or environments in natural language and get 3D models generated automatically.

- Convert 2D images or sketches into fully textured 3D assets.

- Optimize and scale models for gaming engines like Unity and Unreal Engine.

- Use Gemini as a workflow assistant that guides designers with suggestions and corrections.

This makes Gemini AI not just a tool but an intelligent partner in digital creation.

Why 3D Modeling Needs AI

Traditional 3D modeling is time-consuming and relies on manual work in software such as Blender, Maya, 3ds Max, or Cinema 4D. Even with advanced tools, building a realistic model involves several steps:

- Concept design – sketches or references.

- Polygonal modeling or sculpting – defining shapes.

- Texturing & shading – adding materials and colors.

- Rigging & animation – making objects move.

- Lighting & rendering – producing final visuals.

For industries like gaming or film, this process can take weeks per asset. AI steps in to accelerate or even automate these tasks. Gemini AI can generate base meshes, suggest textures, and refine details—allowing artists to focus on creativity instead of repetitive technical work.

Gemini AI Trend in 3D Modeling

The adoption of Gemini AI in 3D modeling follows several key trends:

1. Text-to-3D Generation

Users can type commands like:

“Create a futuristic motorcycle with neon lights and aerodynamic curves”

and Gemini returns a first-pass 3D model within moments, ready for review and refinement.

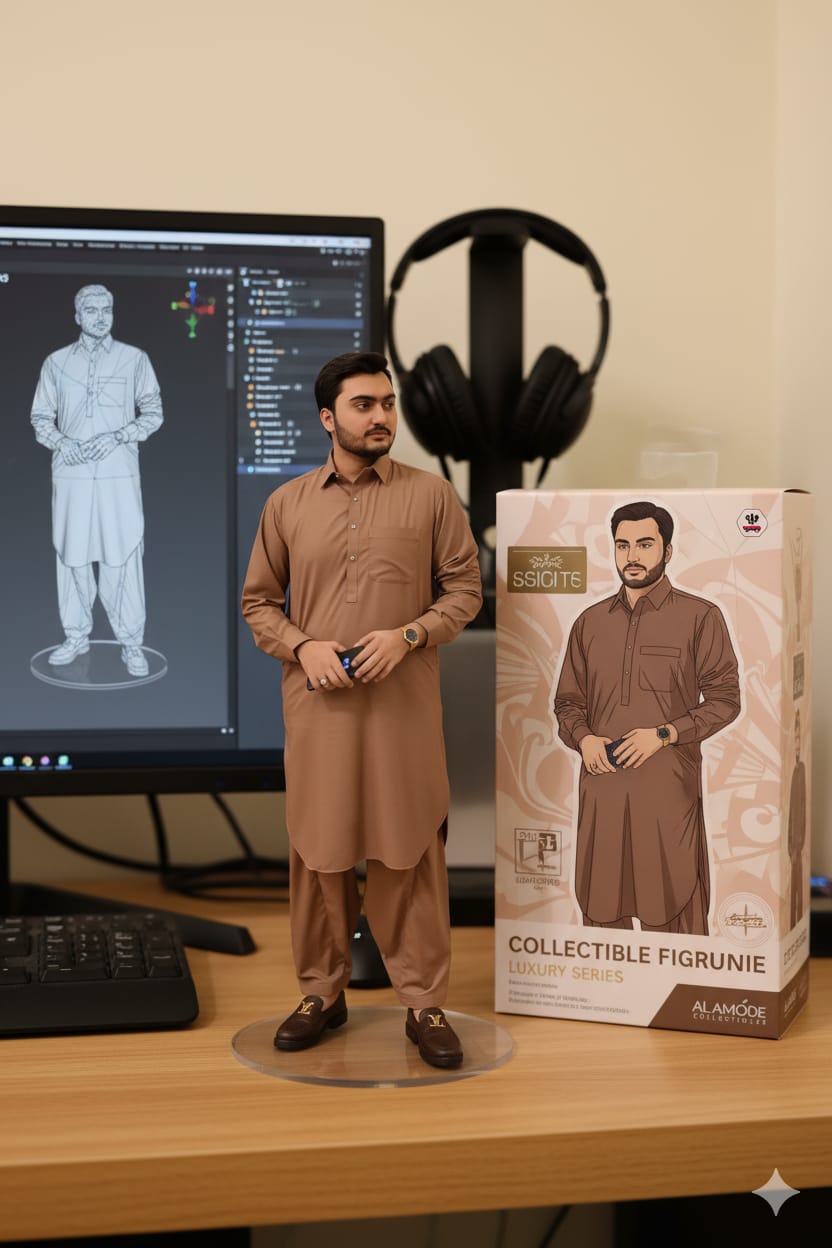

2. 2D-to-3D Conversion

Designers can upload a single image or a sketch, and Gemini transforms it into a three-dimensional object. This trend is popular in fashion, product design, and architecture.
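To make the idea of giving a flat shape depth concrete, here is a minimal sketch of linear extrusion, the simplest geometric way to turn a 2D outline into a 3D solid. It is not how Gemini or any learned image-to-3D system works internally; the function name `extrude_outline` and the sample square are assumptions made for illustration.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]
Vertex = Tuple[float, float, float]
Face = Tuple[int, ...]


def extrude_outline(outline: List[Point2D], depth: float) -> Tuple[List[Vertex], List[Face]]:
    """Extrude a counter-clockwise 2D outline along +z into a closed prism."""
    n = len(outline)
    if n < 3:
        raise ValueError("an outline needs at least three points")
    if depth <= 0:
        raise ValueError("depth must be positive")

    # Bottom ring at z = 0, top ring at z = depth.
    vertices: List[Vertex] = [(x, y, 0.0) for x, y in outline]
    vertices += [(x, y, depth) for x, y in outline]

    faces: List[Face] = []
    for i in range(n):
        j = (i + 1) % n
        # One quad per outline edge, wound so its normal points outward.
        faces.append((i, j, j + n, i + n))
    faces.append(tuple(range(n - 1, -1, -1)))  # bottom cap, facing -z
    faces.append(tuple(range(n, 2 * n)))       # top cap, facing +z
    return vertices, faces


# A unit square traced counter-clockwise, extruded into a thin slab.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
slab_vertices, slab_faces = extrude_outline(square, depth=0.2)
```

A sketch traced from a product silhouette or a floor plan could be passed in the same way. Real 2D-to-3D conversion also has to infer the surfaces that the image does not show, which extrusion does not attempt.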

3. Real-time Collaboration

Gemini integrates with cloud-based workflows, enabling multiple team members to co-create, modify, and refine 3D models simultaneously.

4. Procedural World Building

Instead of manually constructing game worlds, Gemini AI helps generate entire environments—forests, cities, or sci-fi planets—based on prompts.
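Prompt-driven world building ultimately produces structured data that an engine can consume. As a rough illustration of the procedural side only, the sketch below builds a seeded heightmap and labels each cell with a terrain type. The function names, the box-blur smoothing, and the thresholds in `classify` are all assumptions chosen for this example, not part of any Gemini API.

```python
import random
from typing import List


def generate_heightmap(size: int, seed: int, smoothing_passes: int = 2) -> List[List[float]]:
    """Return a size x size grid of heights in [0, 1), reproducible for a given seed."""
    rng = random.Random(seed)
    grid = [[rng.random() for _ in range(size)] for _ in range(size)]
    for _ in range(smoothing_passes):
        smoothed = []
        for r in range(size):
            row = []
            for c in range(size):
                # Average each cell with its neighbors to soften the raw noise.
                neighbors = [
                    grid[rr][cc]
                    for rr in range(max(0, r - 1), min(size, r + 2))
                    for cc in range(max(0, c - 1), min(size, c + 2))
                ]
                row.append(sum(neighbors) / len(neighbors))
            smoothed.append(row)
        grid = smoothed
    return grid


def classify(height: float) -> str:
    """Map a height to a terrain label (thresholds are illustrative)."""
    if height < 0.45:
        return "water"
    if height < 0.55:
        return "forest"
    return "city"


terrain = generate_heightmap(8, seed=42)
labels = [[classify(h) for h in row] for row in terrain]
```

Using a fixed seed keeps the world reproducible, so a team can regenerate the same layout while iterating on the prompt or the thresholds.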

5. Hyper-realistic Textures

Through multimodal training, Gemini can produce photo-realistic textures and material settings that respond consistently to different lighting conditions in game engines.

6. Accessibility and Democratization

AI-powered 3D modeling lowers the entry barrier. Small businesses, indie developers, and even students can now create assets without needing expensive software expertise.

The Gemini AI 3D Modeling Process

To understand how Gemini AI changes workflows, let’s break down the step-by-step process.

Step 1: Input & Prompt Engineering

The process begins with a prompt, which could be:

- A text description (e.g., “Design a medieval sword with golden engravings”).

- A reference image (a sketch or concept art).

- A combination (text + image + scene description).

Gemini interprets the input using its multimodal understanding.
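One way to keep combined inputs organized before sending them to a model is to collect them in a small structure. The sketch below is a hypothetical container, not a real Gemini SDK type: the class `ModelingPrompt`, its fields, and the part labels are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelingPrompt:
    """Hypothetical container for a multimodal 3D modeling request."""
    text: str
    image_paths: List[str] = field(default_factory=list)
    scene: Optional[str] = None

    def to_parts(self) -> List[dict]:
        """Flatten the prompt into an ordered list of typed parts."""
        parts = [{"type": "text", "value": self.text.strip()}]
        for path in self.image_paths:
            parts.append({"type": "image", "value": path})
        if self.scene:
            parts.append({"type": "scene", "value": self.scene.strip()})
        return parts


prompt = ModelingPrompt(
    text="Design a medieval sword with golden engravings",
    image_paths=["sword_sketch.png"],
    scene="Resting on a stone table, lit by torchlight",
)
parts = prompt.to_parts()
```

Keeping the text, the reference images, and the scene description as separate parts makes it easier to vary one of them between iterations while holding the others fixed.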

Step 2: Base Mesh Generation

Gemini creates a low-poly base mesh, which serves as the foundation of the 3D model. Compared with manual polygon modeling, the AI can propose plausible proportions in seconds, although they should still be checked against the reference.
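A base mesh is, at bottom, a list of vertices plus a list of faces that index into it. The sketch below builds the simplest possible one, a box, and checks that it is a closed surface. The helper names `box_mesh` and `count_edges` and the blade dimensions are assumptions for illustration; a generated base mesh would be more complex but uses the same representation.

```python
from typing import List, Set, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int, int]  # a quad, as indices into the vertex list


def box_mesh(width: float, height: float, depth: float) -> Tuple[List[Vertex], List[Face]]:
    """Return an origin-centered box as 8 vertices and 6 outward-facing quads."""
    hx, hy, hz = width / 2, height / 2, depth / 2
    vertices = [
        (-hx, -hy, -hz), (hx, -hy, -hz), (hx, hy, -hz), (-hx, hy, -hz),
        (-hx, -hy, hz), (hx, -hy, hz), (hx, hy, hz), (-hx, hy, hz),
    ]
    faces = [
        (0, 3, 2, 1),  # back (-z)
        (4, 5, 6, 7),  # front (+z)
        (0, 1, 5, 4),  # bottom (-y)
        (3, 7, 6, 2),  # top (+y)
        (0, 4, 7, 3),  # left (-x)
        (1, 2, 6, 5),  # right (+x)
    ]
    return vertices, faces


def count_edges(faces: List[Face]) -> int:
    """Count unique undirected edges across all faces."""
    edges: Set[Tuple[int, int]] = set()
    for face in faces:
        for k in range(len(face)):
            a, b = face[k], face[(k + 1) % len(face)]
            edges.add((min(a, b), max(a, b)))
    return len(edges)


# A long, thin box as a stand-in for a sword blade blockout.
blade_vertices, blade_faces = box_mesh(0.05, 1.0, 0.01)
euler = len(blade_vertices) - count_edges(blade_faces) + len(blade_faces)
```

For a closed mesh without holes, vertices minus edges plus faces equals 2, so `euler` is a quick sanity check before moving on to sculpting.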

Step 3: Detail Sculpting

AI refines the shape by adding high-resolution details like curves, engravings, or organic textures. This stage mimics what an artist would normally do in sculpting software like ZBrush.

Step 4: Texturing and Materials

Using its trained datasets, Gemini applies textures, shaders, and materials that adapt realistically to light. It can automatically create wood, metal, glass, or fabric finishes.
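Materials that react plausibly to light are usually described with physically based parameters. The sketch below emits material entries using the metallic-roughness fields from the glTF 2.0 format (`baseColorFactor`, `metallicFactor`, `roughnessFactor`). The preset values and the helper `gltf_material` are assumptions chosen for illustration, not values produced by Gemini.

```python
from typing import Dict, Tuple

# Illustrative presets only: (base color RGB, metallic, roughness).
PRESETS: Dict[str, Tuple[Tuple[float, float, float], float, float]] = {
    "wood": ((0.45, 0.30, 0.15), 0.0, 0.8),
    "metal": ((0.90, 0.75, 0.30), 1.0, 0.3),
    "fabric": ((0.20, 0.25, 0.60), 0.0, 0.95),
}


def gltf_material(preset: str) -> dict:
    """Build a glTF-style material entry from a named preset."""
    rgb, metallic, roughness = PRESETS[preset]
    return {
        "name": preset,
        "pbrMetallicRoughness": {
            "baseColorFactor": [*rgb, 1.0],  # RGBA, fully opaque
            "metallicFactor": metallic,
            "roughnessFactor": roughness,
        },
    }


gold_trim = gltf_material("metal")
```

Glass is left out of the presets because transparent materials need additional settings beyond these three parameters.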

Step 5: Rigging (Optional)

For characters or objects requiring animation, Gemini can auto-generate skeletal rigs so models are ready for movement in real-time engines.
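A skeletal rig is a hierarchy of bones, each positioned relative to its parent. The sketch below shows that structure and how rest-pose world positions follow from it. The `Bone` class, the bone names, and the offsets are hypothetical, and rotations are left out to keep the example short.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Bone:
    name: str
    parent: Optional[int]  # index of the parent bone, None for the root
    offset: Vec3           # position relative to the parent


def world_positions(bones: List[Bone]) -> List[Vec3]:
    """Accumulate offsets down the hierarchy; parents must precede children."""
    positions: List[Vec3] = []
    for index, bone in enumerate(bones):
        if bone.parent is None:
            base: Vec3 = (0.0, 0.0, 0.0)
        elif bone.parent < index:
            base = positions[bone.parent]
        else:
            raise ValueError(f"bone '{bone.name}' is listed before its parent")
        positions.append((
            base[0] + bone.offset[0],
            base[1] + bone.offset[1],
            base[2] + bone.offset[2],
        ))
    return positions


skeleton = [
    Bone("hips", None, (0.0, 1.0, 0.0)),
    Bone("spine", 0, (0.0, 0.5, 0.0)),
    Bone("head", 1, (0.0, 0.25, 0.0)),
    Bone("left_arm", 1, (0.25, 0.25, 0.0)),
]
joints = world_positions(skeleton)
```

Moving the `hips` offset shifts every bone below it, which is the property that lets an engine animate a whole character from a few joint changes.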

Step 6: Optimization

The AI simplifies meshes for use in VR/AR or gaming while keeping visible loss of detail to a minimum, so that models meet real-time performance budgets.
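Mesh simplification can be done in many ways. As one small, well-known example, the sketch below uses vertex clustering: vertices that fall into the same grid cell are merged into their average, and triangles that collapse are dropped. This is a stand-in chosen for brevity, not a description of what Gemini does; the function name and the sample data are assumptions.

```python
import math
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def cluster_decimate(
    vertices: List[Vertex], triangles: List[Triangle], cell_size: float
) -> Tuple[List[Vertex], List[Triangle]]:
    """Merge vertices that share a grid cell and drop collapsed triangles."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")

    cell_to_index: Dict[Tuple[int, int, int], int] = {}
    sums: List[List[float]] = []
    counts: List[int] = []
    remap: List[int] = []  # old vertex index -> new vertex index

    for x, y, z in vertices:
        cell = (
            math.floor(x / cell_size),
            math.floor(y / cell_size),
            math.floor(z / cell_size),
        )
        if cell not in cell_to_index:
            cell_to_index[cell] = len(sums)
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        index = cell_to_index[cell]
        sums[index][0] += x
        sums[index][1] += y
        sums[index][2] += z
        counts[index] += 1
        remap.append(index)

    # Each merged vertex sits at the average of the vertices in its cell.
    merged = [(s[0] / c, s[1] / c, s[2] / c) for s, c in zip(sums, counts)]

    kept: List[Triangle] = []
    seen = set()
    for a, b, c in triangles:
        tri = (remap[a], remap[b], remap[c])
        if len(set(tri)) < 3:
            continue  # two or more corners merged: the triangle has no area
        key = frozenset(tri)
        if key in seen:
            continue  # duplicate of a triangle already kept
        seen.add(key)
        kept.append(tri)
    return merged, kept


dense_vertices = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.5, 0.0, 0.0), (0.0, 1.5, 0.0)]
dense_triangles = [(0, 1, 3), (1, 2, 3)]
lod_vertices, lod_triangles = cluster_decimate(dense_vertices, dense_triangles, cell_size=1.0)
```

A larger `cell_size` removes more geometry, so the same function can produce several levels of detail from one source mesh. Some detail is always lost; the goal is to lose it where the viewer will not notice.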

Step 7: Export and Integration

Finally, models are exported in formats such as FBX, OBJ, or glTF, ready to be imported into Blender, Maya, Unity, or Unreal Engine.
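Of these formats, OBJ is the easiest to show because it is plain text: `v` lines hold vertex positions and `f` lines hold faces with 1-based vertex indices. The sketch below serializes a mesh that way; the helper name `to_obj` and the sample triangle are assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def to_obj(vertices: List[Vertex], faces: List[Sequence[int]], name: str = "asset") -> str:
    """Serialize a mesh to Wavefront OBJ text (positions and faces only)."""
    lines = [f"o {name}"]
    for x, y, z in vertices:
        lines.append(f"v {x:.6f} {y:.6f} {z:.6f}")
    for face in faces:
        # OBJ indices start at 1, so shift the 0-based indices used in Python.
        lines.append("f " + " ".join(str(i + 1) for i in face))
    return "\n".join(lines) + "\n"


triangle_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
triangle_faces = [(0, 1, 2)]
obj_text = to_obj(triangle_vertices, triangle_faces, name="triangle")
```

Writing `obj_text` to a `.obj` file gives something Blender or Maya can import directly. Materials, UVs, and rigs need a richer format such as FBX or glTF.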

Applications of Gemini AI in 3D Modeling

🎮 Gaming Industry

- Fast generation of characters, weapons, environments.

- Indie developers can compete with AAA studios by saving time and costs.

🎥 Film & Animation

- Storyboarding with AI-generated 3D assets.

- Creating realistic CGI backgrounds without huge production teams.

🏛 Architecture & Interior Design

- Converting 2D blueprints into walkthrough-ready 3D spaces.

- Quick visualization of design variations.

🛍 E-commerce & Marketing

- 3D product displays for AR try-ons.

- Virtual stores with Gemini-generated 3D catalogs.

🏭 Manufacturing & Prototyping

- Digital twins of machinery for predictive maintenance.

- Rapid prototyping of industrial designs.

🩺 Healthcare

- Medical simulations in 3D.

- Anatomy models for education and VR surgery training.

Benefits of Gemini AI in 3D Modeling

- Speed – Can cut weeks of work down to minutes for a first-pass asset.

- Cost-effectiveness – Lowers dependency on large design teams.

- Creativity boost – Artists focus on innovation, not repetitive tasks.

- Scalability – Easy to generate thousands of assets for large projects.

- Accessibility – Makes 3D modeling available to non-experts.

Challenges and Limitations

While promising, AI-driven 3D modeling faces hurdles:

- Quality control – AI sometimes generates inaccurate proportions.

- Originality – Risk of outputs resembling existing copyrighted designs.

- Data bias – Training datasets may limit diversity in design.

- Ethical concerns – Replacement of human artists in some industries.

- Technical compatibility – Models may require cleanup before final use.
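Some of the cleanup mentioned above can be caught automatically before an asset reaches an artist. The sketch below runs a few basic structural checks on a mesh. The function name and the specific checks are assumptions for illustration; production pipelines typically test much more, such as non-manifold edges and flipped normals.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def find_mesh_problems(vertices: List[Vertex], faces: List[Sequence[int]]) -> List[str]:
    """Return human-readable descriptions of basic structural problems."""
    problems: List[str] = []
    for index, face in enumerate(faces):
        if len(face) < 3:
            problems.append(f"face {index}: fewer than 3 vertices")
        if len(set(face)) != len(face):
            problems.append(f"face {index}: repeated vertex index")
        if any(i < 0 or i >= len(vertices) for i in face):
            problems.append(f"face {index}: index out of range")

    used = {i for face in faces for i in face}
    unused = [i for i in range(len(vertices)) if i not in used]
    if unused:
        problems.append(f"unreferenced vertices: {unused}")
    return problems


generated_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (5.0, 5.0, 5.0)]
generated_faces = [(0, 1, 2), (0, 1, 1), (1, 2, 7)]
report = find_mesh_problems(generated_vertices, generated_faces)
```

An empty report does not mean the model looks right, only that it is structurally usable; proportions and style still need a human eye.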

The Future of 3D Modeling with Gemini AI

The next decade will likely see Gemini AI evolve into an industry standard for 3D workflows. Some predicted directions include:

- Fully automated world generation for the Metaverse.

- Voice-controlled modeling where creators speak and AI builds in real time.

- Integration with AR glasses for hands-free design in physical spaces.

- AI-human hybrid studios where teams guide AI while it handles production.

- Marketplace of AI-generated assets, reducing cost of 3D content creation worldwide.

Conclusion

The Gemini AI trend in 3D modeling represents a paradigm shift in how digital assets are created, refined, and deployed. Instead of being limited by manual processes, designers now have access to a multimodal AI assistant that accelerates creativity, democratizes 3D modeling, and opens doors to new industries.

From gaming and film to architecture and healthcare, Gemini AI is shaping the future of how we interact with digital environments. While challenges remain in terms of originality, ethics, and technical fine-tuning, the trajectory is clear: 3D modeling powered by Gemini AI is the foundation of tomorrow’s virtual and physical worlds.